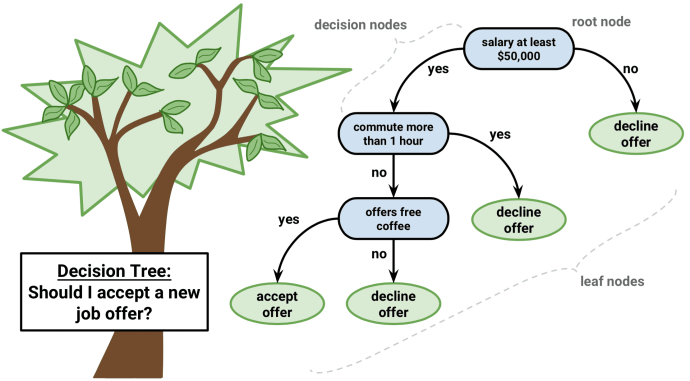

Introduction

Creativity is something human, isn’t it? I mean, when you think of creativity your mind floats to some amazing pieces of music either with interesting beats you’d never thought about or inspired compositions of instruments that just “work” together. Or maybe you think about art, in all its varieties, and its ability to convey original ideas in unique ways; maybe it’s the particular style of the artist or the scene or idea they are depicting that draws you in and make you contemplate their motivations or thought processes. But there’s also poetry, and writing in general, that convey something deep about the human condition. These most literally tell a story, although arguably all of the aforementioned art forms do, centred on an idea or collections of ideas – this is something that surely requires imagination and creativity? All of these things are products of the human mind; its seemingly infinite capacity for exploration of ideas based on the way it processes and learns from the world around itself. Could an AI ever recreate any of these? Surely not – all these ideas are incredibly complicated and there is no “algorithm” for a successful piece of art or music or book, something about them relies on human connection and understanding. But if we strip away the fact that an author was a human, the art itself is still compelling (and whether or not that is attributable to the human artist actually being human is another debate) for a multitude of reasons. You can’t really explain what these reasons are – its something indescribable. And people react differently, indeed art in all of its forms is subjective, but the fact that it spawned from a sense of individual thought or moment of creativity makes it special, and maybe that’s how we convince ourselves that an AI could never really do something creative. Like I said earlier, creativity is something human, isn’t it?

Project Magenta / Work by Google

Well, Google’s Project Magenta addresses this very question. The first tangible product of their work can be seen in this video. It learned to play the piano from just a few notes, created a beat and picked suitable instruments to ensemble together to create its first composition. Primitive? Yes. Creative? I’d argue also yes, but I see also why this early stage piece is not worthy of such an accolade just yet. It captures the right things, though. This AI learned from the world around it (albeit the only world it “knew” was musical instruments, how they sound and how they are put together – humans have a much wider range of stimuli to base art upon) and put these sounds together in a unique way to create something. I would advise having a play around with some of the Demos on Project Magenta – they’re really interesting and a showcase of how far AI has come! I could talk more about this, but I want to move on to something else in this blog.

Well, Google’s Project Magenta addresses this very question. The first tangible product of their work can be seen in this video. It learned to play the piano from just a few notes, created a beat and picked suitable instruments to ensemble together to create its first composition. Primitive? Yes. Creative? I’d argue also yes, but I see also why this early stage piece is not worthy of such an accolade just yet. It captures the right things, though. This AI learned from the world around it (albeit the only world it “knew” was musical instruments, how they sound and how they are put together – humans have a much wider range of stimuli to base art upon) and put these sounds together in a unique way to create something. I would advise having a play around with some of the Demos on Project Magenta – they’re really interesting and a showcase of how far AI has come! I could talk more about this, but I want to move on to something else in this blog.

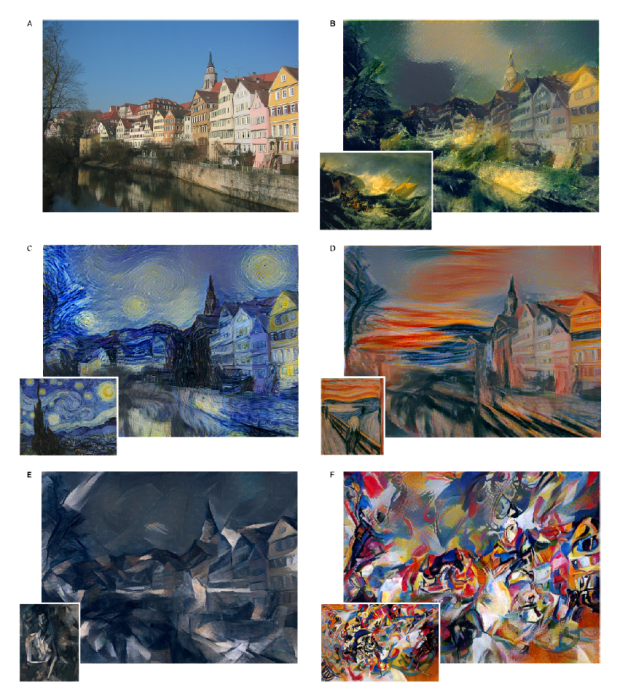

There’s also a nice article on a Deep Neural Network that was able to mimic the style of artists. A picture of their work can be seen below. These art pieces could also be considered creative, but I think the fact that they so closely mimic other work means they are anything but. Still, though, they in a sense “understand the style” of an artist and are able to recreate pictures “in their image”. It’s certainly very interesting, but I would argue that this doesn’t have that moment of inspiration or uniqueness that would qualify these pieces to be truly creative.

(Source: ARXIV/A NEURAL ALGORITHM OF ARTISTIC STYLE/GATYS, ET AL.)

(Source: ARXIV/A NEURAL ALGORITHM OF ARTISTIC STYLE/GATYS, ET AL.)

AlphaGo

I want to use this blog post to talk about one moment in particular that certainly peaked my interest in Artificial Intelligence and Machine Learning since it’s a truly compelling story. That is the story of Deepmind’s AlphaGo. There is a rich story I want to convey here, that directly relates to this blog post, but first I want to explain a little context…

Go is a game that was invented in China more than 2,500 years ago and is believed to be the oldest board game continuously played to the present day. There are tens of millions of players worldwide, and the game is very popular in East Asia. In a lot of cultures, being good at Go is considered a form of vast intelligence; Go was considered one of the four essential arts of the cultured aristochratic Chinese scholars in antiquity. It’s an incredibly popular game even today, with those who are good at the game like celebrities in their respective countries. The rules are very simple, but the game is complex. The objective of the game is to capture the most territory on the board and you and your opponent take turns in placing white and black “stones” on points of the board. Once placed on the board, stones may not be moved, but stones are removed from the board when “captured” (when it is surrounded completely by stones of the opposite colour). The game proceeds until neither player wishes to make another move; the game has no set ending conditions beyond this. When a game concludes, the territory is counted along with captured stones and komi (points added to the score of the player with the white stones as compensation for playing second, which is normally either 6.5 or 7.5 depending on the rule-set being used) to determine the winner. Players may also choose to resign. This sounds like a very simple game, and it is! But it has always been the ultimate goal for artificially intelligent systems to master this game, purely due to the number of possibilities of moves there are at every given point. There are more possible games of Go than there are atoms in our universe – a truly extraordinary amount (the lower bound on the number of legal board positions in Go has been estimated to be 2 x 10170). Many expected we were decades away from this dream. It is not the first time that AI systems have mastered games, but major successed previously included systems like IBM Deep Blue, which notably beat Kasparov at Chess in 1997. This was a remarkable achievement, and the first time a computer had beaten a human grandmaster at Chess. Go, however, is much much harder. IBM Deep Blue was the product of several experts coming up with clever algorithms to evaluate all possible “good” chess moves at a given position (“brute force search”) and decide which one was best. You can’t do this with Go, there are just too many possibilities.

This sounds like a very simple game, and it is! But it has always been the ultimate goal for artificially intelligent systems to master this game, purely due to the number of possibilities of moves there are at every given point. There are more possible games of Go than there are atoms in our universe – a truly extraordinary amount (the lower bound on the number of legal board positions in Go has been estimated to be 2 x 10170). Many expected we were decades away from this dream. It is not the first time that AI systems have mastered games, but major successed previously included systems like IBM Deep Blue, which notably beat Kasparov at Chess in 1997. This was a remarkable achievement, and the first time a computer had beaten a human grandmaster at Chess. Go, however, is much much harder. IBM Deep Blue was the product of several experts coming up with clever algorithms to evaluate all possible “good” chess moves at a given position (“brute force search”) and decide which one was best. You can’t do this with Go, there are just too many possibilities.

Deepmind revolutionised the way we thought about playing games by introducing Deep Neural Networks applied to Reinforcement Learning. I don’t want to go too much into how it works, because that is a topic for a blog itself, but it roughly combines three main ideas: the policy network, the value network and the Monte Carlo Tree Search (images above). Given a board position, the policy networks provide guidance regarding which action to choose – the output is a probability value for each possible legal move and so actions with higher probability values correspond to actions that have a higher chance of leading to a win. The value network provides an estimate of the value of the current state of the game i.e what is the probability I will ultimately win, given the position I am in now. The Tree Search explores a subset of different game possibilities from the current game state. It can look ~50 steps into the future, but if it is having a hard time deciding it may look up to 200 steps into the future. But how does it understand the concept of value, and get these win probabilities, and know which games to explore? This is the power of Machine Learning. AlphaGo watched thousands and thousands of good amateur human games to learn some of these values. It took this as a basis and then began to play itself millions of times, gradually updating itself and using new versions of itself to play older versions (and if it won most of the time with the newer version, it would assume that this version was “better” at playing Go, so update itself). It gradually began to learn what moves led to success and which ones were doomed to fail. It began to mimic this “human” intuition that all good Go players have. Often, when asked why a Go player played a particular move, they simply answer with “it felt right”. For humans, “it felt right” is based on the fact that the human has previously played thousands of these games, and it tends to know what works out well – it was this concept that the machine was trying to emulate mathematically.

Now you know what Go is, and you know how Deepmind tried to attack the problem, you’re either sat there going wow this is all really complicated or wow that’s actually really smart. Both of those are good responses, but I need to comment at this stage that this had never been done before – the ideas were brand new and no one really expected they would work at this level. Deepmind knew that their algorithm worked on good amateurs as they had played people online before, and they knew it could beat the European Go Champion (Fan Hui, who later became an advisor for the Go project at Deepmind after losing to the system 5-0) but they wanted to challenge the best of the best. Lee Sedol was often regarded as the Best Go player in the world at the time. Arguably, he still is. To date, he has 49 Gold medals including 18 Global Gold medals, so he’s pretty good at Go. He is ranked 9d, which is the best ranking a Go player can receive (by comparison, Fan Hui was 2d). As a result of all of this, no one thought Deepmind would win. Everyone expected Lee Sedol to win 5-0 or at least 4-1. Maybe its something to do with human intellectual arrogance (“how can we possibly be outsmarted by a machine?!”) and yet that’s not what happened…

AlphaGo vs Lee Sedol

The match was streamed worldwide, with millions tuning in. It was played at the Four Seasons Hotel in Seoul over 5 consecutive days. The winner was set to win $1 million, but the match wasn’t about the money – it was man vs machine, who will win in the battle of intellect?

The match was streamed worldwide, with millions tuning in. It was played at the Four Seasons Hotel in Seoul over 5 consecutive days. The winner was set to win $1 million, but the match wasn’t about the money – it was man vs machine, who will win in the battle of intellect?

AlphaGo went on to win Game 1. Lee appeared to be in control throughout much of the match, but AlphaGo gained the advantage in the final 20 minutes and Lee resigned. Lee stated afterwards that he had made a critical error at the beginning of the match; he said that the computer’s strategy in the early part of the game was “excellent” and that the AI had made one unusual move that no human Go player would have made. This was an initial shock, especially since most people pipped him to win 5-0, but Lee was just testing the water. Now he knows what he is up against, and most people at this stage did not think the AI would seriously stand a chance of winning the best of 5. He went home to analyse his game and came back the next day. In Game 2, something amazing happened. The players began and started to put stones on the board. As you can imagine, a generic strategy is to place your pieces in quite open corner-ish areas at the beginning of the game to establish some territory. This happened initially, but in Move 37 AlphaGo did something rather surprising: it played a rather central move. Many commentators, themselves 9d professionals, “thought it was a mistake” and labelled it “a very strange move”. Lee Sedol found the move perplexing, taking 15 minutes to decide a comeback – far longer than he often takes – which included a smoking break where he paced up and down to try and clear his head. This move, although quite early, led to AlphaGo taking control and Lee Sedol eventually resigned. While commentators called later AlphaGo moves “brilliant”, Move 37 was described as “creative” and “unique” by Michael Redmond (9d professional Go player). Afterwards, in the post-game press conference, Lee Sedol was in shock. “Yesterday, I was surprised,” he said through an interpreter, referring to his previous days loss. “But today I am speechless. If you look at the way the game was played, I admit, it was a very clear loss on my part. From the very beginning of the game, there was not a moment in time when I felt that I was leading.” Maybe machines have the capacity for creativity, from a human point of view, after all?

In Game 2, something amazing happened. The players began and started to put stones on the board. As you can imagine, a generic strategy is to place your pieces in quite open corner-ish areas at the beginning of the game to establish some territory. This happened initially, but in Move 37 AlphaGo did something rather surprising: it played a rather central move. Many commentators, themselves 9d professionals, “thought it was a mistake” and labelled it “a very strange move”. Lee Sedol found the move perplexing, taking 15 minutes to decide a comeback – far longer than he often takes – which included a smoking break where he paced up and down to try and clear his head. This move, although quite early, led to AlphaGo taking control and Lee Sedol eventually resigned. While commentators called later AlphaGo moves “brilliant”, Move 37 was described as “creative” and “unique” by Michael Redmond (9d professional Go player). Afterwards, in the post-game press conference, Lee Sedol was in shock. “Yesterday, I was surprised,” he said through an interpreter, referring to his previous days loss. “But today I am speechless. If you look at the way the game was played, I admit, it was a very clear loss on my part. From the very beginning of the game, there was not a moment in time when I felt that I was leading.” Maybe machines have the capacity for creativity, from a human point of view, after all?

After the second game, there had still been strong doubts among players whether AlphaGo was truly a strong player in the sense that a human might be. The third game was described as removing that doubt; with analysts commenting that “AlphaGo won so convincingly as to remove all doubt about its strength from the minds of experienced players. In fact, it played so well that it was almost scary …”. AlphaGo won this game since Lee Sedol resigned at move 176.

AlphaGo had now won the third game out of 5, meaning it had won the series. Deepmind had already said it was donating the $1 million to a variety of charities (including UNICEF). There was a sombre atmosphere in the hotel now, though. Human intellect had been defeated, in a sense. A game that was celebrated for its complexity, a game that rewarded creativity and understanding, had been “understood” by a machine so well that it was able to beat one of the smartest minds in the business. Lee Sedol returned for the fourth match with nothing to play for in terms of contest, but everything to play for in terms of pride. He had often said that he was carrying a huge burden in the sense that he was “representing humanity”. In post match conferences he felt he was “letting humanity down” with his play. While it was an amazing achievement for Deepmind to have an algorithm that could perform so well at this game, it became incredibly difficult to watch. A man who has dedicated much of his life to this game was being dismantled by a machine who only learned about Go a few months ago. In the fourth match, however, Lee Sedol approached it with a different, more aggresive, style of play. He began to attack AlphaGo’s regions on the board rather than build up territory himself and while initially AlphaGo was able to respond, a moment of brilliance happened at Move 78. He played a particularly inspired white stone to develop a so-called “wedge play” after some thought, and AlphaGo responded poorly on Move 79. A few moves after, AlphaGo had predicted its own chances of winning had fallen off a cliff. AlphaGo began to spiral, desperately trying moves that might work, but knowing that its chances of coming back were astronomically low and Lee Sedol began to take control. Eventually, AlphaGo resigned, and Lee Sedol won the game. Among Go players, the move was dubbed “God’s Touch”, with commentators describing the move as brilliant: “It took me by surprise. I’m sure that it would take most opponents by surprise. I think it took AlphaGo by surprise.”

Lee Sedol returned for the fourth match with nothing to play for in terms of contest, but everything to play for in terms of pride. He had often said that he was carrying a huge burden in the sense that he was “representing humanity”. In post match conferences he felt he was “letting humanity down” with his play. While it was an amazing achievement for Deepmind to have an algorithm that could perform so well at this game, it became incredibly difficult to watch. A man who has dedicated much of his life to this game was being dismantled by a machine who only learned about Go a few months ago. In the fourth match, however, Lee Sedol approached it with a different, more aggresive, style of play. He began to attack AlphaGo’s regions on the board rather than build up territory himself and while initially AlphaGo was able to respond, a moment of brilliance happened at Move 78. He played a particularly inspired white stone to develop a so-called “wedge play” after some thought, and AlphaGo responded poorly on Move 79. A few moves after, AlphaGo had predicted its own chances of winning had fallen off a cliff. AlphaGo began to spiral, desperately trying moves that might work, but knowing that its chances of coming back were astronomically low and Lee Sedol began to take control. Eventually, AlphaGo resigned, and Lee Sedol won the game. Among Go players, the move was dubbed “God’s Touch”, with commentators describing the move as brilliant: “It took me by surprise. I’m sure that it would take most opponents by surprise. I think it took AlphaGo by surprise.” In the post match press conference, he looked much happier that he was able to gain a small victory on the side of human intellect. In that game, towards the end, AlphaGo looked amateurish, floundering at moves that no professional would make in a desperate attempt to regain control. AlphaGo went on to win the fifth game, however, and the match ended 4-1 to AlphaGo.

In the post match press conference, he looked much happier that he was able to gain a small victory on the side of human intellect. In that game, towards the end, AlphaGo looked amateurish, floundering at moves that no professional would make in a desperate attempt to regain control. AlphaGo went on to win the fifth game, however, and the match ended 4-1 to AlphaGo.

What we learned

We learned that a machines was capable of understanding a very complex game decades before expected using new mathematical and computational ideas, but there was something interesting that came out from Moves 27 and 78. Fan Hui, who was at the event, had much thought about his own game as a result to losing against AlphaGo months earlier. He played against AlphaGo many times after, and himself described Move 27 as “beautiful”. David Silver, the lead researcher on the AlphaGo project, gave us insight into how the machine viewed the move. Based on all the information it had based on the games it had played against itself and studied from humans, AlphaGo had calculated that there was a one-in-ten-thousand chance that a human would make that move. But when it drew on all the knowledge it had accumulated by playing itself so many times—and looked ahead in the future of the game—it decided to make the move anyway. And the move was genius. It rattled Lee Sedol and ultimately helped it to win the game. This itself could be seen as a beautiful moment of creativity – deciding to play a move that humans are extremely unlikely to make because it believes that it will work (but of course, it can’t know for certain). That’s what moments of creativity reduce to in the game of Go, isnt it?

We learned that a machines was capable of understanding a very complex game decades before expected using new mathematical and computational ideas, but there was something interesting that came out from Moves 27 and 78. Fan Hui, who was at the event, had much thought about his own game as a result to losing against AlphaGo months earlier. He played against AlphaGo many times after, and himself described Move 27 as “beautiful”. David Silver, the lead researcher on the AlphaGo project, gave us insight into how the machine viewed the move. Based on all the information it had based on the games it had played against itself and studied from humans, AlphaGo had calculated that there was a one-in-ten-thousand chance that a human would make that move. But when it drew on all the knowledge it had accumulated by playing itself so many times—and looked ahead in the future of the game—it decided to make the move anyway. And the move was genius. It rattled Lee Sedol and ultimately helped it to win the game. This itself could be seen as a beautiful moment of creativity – deciding to play a move that humans are extremely unlikely to make because it believes that it will work (but of course, it can’t know for certain). That’s what moments of creativity reduce to in the game of Go, isnt it?

But what about Move 78? Why did AlphaGo start to crumble after “Gods Touch”? Was it really that good of a move? Well, here’s some beautiful symmetry: Demis Hassabis, who oversees the DeepMind Lab and was very much the face of AlphaGo during the seven-day match, told reporters that AlphaGo was unprepared for Lee Sedol’s Move 78 because it didn’t think that a human would ever play it. Drawing on its months and months of training, it decided there was a one-in-ten-thousand chance of that happening. The exact same probability. It was a move so rare that AlphaGo was simply taken aback, so it was unsure how to proceed and ultimately lost the game. It was Move 78 that allowed Lee Sedol to regain control of the match, and a move that many people and professionals didn’t expect.

What I take from this is that creativity, in all forms, can often make us reflect on our own world view. You look at a piece of art, or listen to some music, and people often describe it as “speaking to them”. Something creative can make you look at things differently, with a new appreciation. That’s why I think we have already seen creativity in AI. Fan Hui’s world view was altered as a result of losing to AlphaGo, and he became a better Go player, competitor and person because of it (quoting his own, deeply philosophical, words). But we can learn from AI, and use it as a powerful tool to solve complex problems. It forces us, too, to think about things differently but its still got a long way to go to match the power of the human brain: AlphaGo learned creativity after millions of Go games, but Lee Sedol was able to learn from AlphaGo in just one move. He said himself that he meticulously analyses all of his games, and so no doubt he would have spent hours deliberating over these, but the human brain is truly remarkable in the sense that it can learn so much from such a small sample size. It was able to recreate a moment of genius (Move 27) in Move 78 after only seeing it once, and I find that somewhat beautiful.

Lee Sedol and Fan Hui both went on to win many matches, no doubt learning from AlphaGo. In writing this (long!) blog post the Wikipedia pages that document the game were extremely useful, but I was inspired by the AlphaGo documentary, which can be found on Netflix or Prime Video (and presumably other movie streaming sites). They also have a website: https://www.alphagomovie.com/. I highly recommend you give it a watch if you’re interested, as it tells this story very well.